Saturday, February 7, 2026

Introducing Rooms: Live Audio on Mention

The Biggest Feature Drop in Mention History

Today we are incredibly excited to announce Rooms — live audio rooms built directly into Mention. Think of it like Messenger inside Facebook: Rooms is a core part of the Mention experience, and it also has its own standalone app — Agora, available at agora.mention.earth. This is the single largest feature we have ever shipped, and it fundamentally changes what Mention is: from a social platform where you read and write, to one where you can talk and listen in real-time.

Rooms lets any user create a live audio room, invite speakers, and broadcast to an unlimited audience of listeners. Think of it as your own podcast studio, town hall, or casual hangout — all inside Mention, or through the dedicated Agora app.

We did not take shortcuts. We built this on top of WebRTC with a self-hosted Selective Forwarding Unit (SFU), running on our own infrastructure. No third-party audio APIs. No usage-based pricing that would force us to limit your conversations. Just raw, low-latency, high-quality audio — from our servers to your ears.

Let us dive into everything.

What Are Rooms?

A Room is a live audio room hosted by a Mention or Agora user. When you create a Room, you become the host. You give it a title, optionally a topic and description, and then you go live. That is it — you are broadcasting.

Other users can join your Room as listeners. They hear everything the speakers say in real-time, with sub-second latency. If a listener wants to speak, they can raise their hand (request to speak), and the host can approve or deny the request.

Once approved, a listener becomes a speaker. Speakers can unmute their microphone and talk to the entire room. The host can also remove speakers at any time, returning them to listener status.

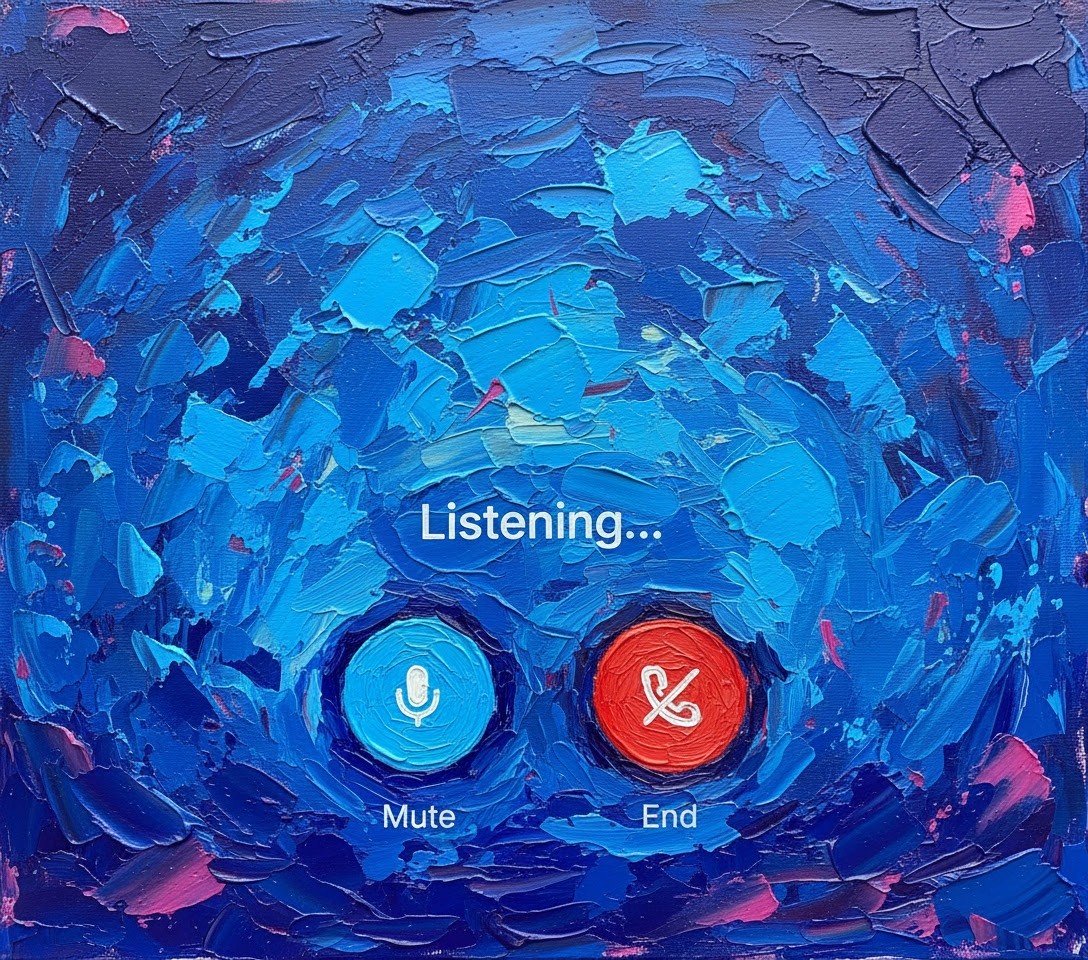

The entire flow is designed to feel natural and effortless:

Create — Tap the "+" button on the Rooms screen. Give your Room a title and topic.

Go Live — Hit "Start Now" and you are broadcasting. A LiveKit room is created on our SFU, and your audio session begins.

Invite and Share — Share your Room. It appears in the Rooms directory for anyone to join.

Manage — Approve or deny speaker requests. Mute yourself. Remove speakers. You are in full control.

End — When you are done, end the Room. The LiveKit room is torn down, Redis state is cleaned up, and the Room is archived.

How It Works Under the Hood

This is where it gets interesting. We built Rooms with a dual-layer architecture that separates the control plane from the media plane.

Layer 1: Socket.IO — The Control Plane

Socket.IO handles everything that is not audio. When you join a Room, your client establishes a WebSocket connection to our backend through the /rooms Socket.IO namespace. This connection manages:

Participant tracking — Who is in the room, their roles (host, speaker, listener), and their mute state

Speaker requests — The raise-hand, approve/deny, role-change flow

Room lifecycle — Start, end, and participant join/leave events

Real-time UI updates — Mute indicators, participant count, speaker list changes

All room state is persisted in Redis, not in-memory. This means if our backend process restarts, your Room survives. It also means we can scale horizontally — multiple backend instances can serve the same Room through Redis pub/sub.
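
To make the control plane concrete, here is a minimal sketch of how those events could update room state. The event names, field names, and the `applyEvent` helper are assumptions made for illustration, not the actual wire format. The host entry is assumed to be written when the Room is created, so `join` only ever adds listeners.

```typescript
// Illustrative types only; names are assumptions, not Mention's wire format.
type Role = "host" | "speaker" | "listener";

interface Participant {
  role: Role;
  muted: boolean;
}

interface RoomState {
  participants: Map<string, Participant>;
  requests: Set<string>; // userIds with a pending raise-hand request
}

type ControlEvent =
  | { type: "join"; userId: string }
  | { type: "leave"; userId: string }
  | { type: "raiseHand"; userId: string }
  | { type: "approve"; userId: string }
  | { type: "deny"; userId: string }
  | { type: "removeSpeaker"; userId: string }
  | { type: "setMuted"; userId: string; muted: boolean };

// Pure reducer: returns a new state and leaves the input untouched.
function applyEvent(state: RoomState, event: ControlEvent): RoomState {
  const participants = new Map(state.participants);
  const requests = new Set(state.requests);
  const current = participants.get(event.userId);

  switch (event.type) {
    case "join":
      // New participants start as muted listeners.
      if (!current) participants.set(event.userId, { role: "listener", muted: true });
      break;
    case "leave":
      participants.delete(event.userId);
      requests.delete(event.userId);
      break;
    case "raiseHand":
      // Only listeners can ask to speak.
      if (current?.role === "listener") requests.add(event.userId);
      break;
    case "approve":
      // Promote only if a request was actually pending.
      if (current && requests.delete(event.userId)) {
        participants.set(event.userId, { role: "speaker", muted: true });
      }
      break;
    case "deny":
      requests.delete(event.userId);
      break;
    case "removeSpeaker":
      if (current?.role === "speaker") {
        participants.set(event.userId, { role: "listener", muted: true });
      }
      break;
    case "setMuted":
      // Listeners have no microphone to toggle.
      if (current && current.role !== "listener") {
        participants.set(event.userId, { ...current, muted: event.muted });
      }
      break;
  }
  return { participants, requests };
}
```

Keeping the transition logic in a pure function like this makes it easy to unit test independently of the socket layer and of Redis.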

Layer 2: LiveKit SFU — The Media Plane

Audio is handled entirely by LiveKit, an open-source WebRTC Selective Forwarding Unit that we self-host on our own DigitalOcean infrastructure.

When a Room goes live, our backend creates a LiveKit room via the Room Service API. When a client joins, it requests a LiveKit access token from our backend (POST /api/rooms/:id/token). The token encodes the user identity, the room name, and their permissions — can they publish audio? Can they subscribe?

The client then connects directly to the LiveKit SFU over WebRTC. Audio tracks flow peer-to-SFU, not peer-to-peer. This is critical for scalability — in a peer-to-peer model, a room with 50 listeners would require the speaker to upload 50 separate audio streams. With an SFU, the speaker uploads once, and LiveKit distributes to all subscribers.
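
The arithmetic behind that claim can be written out as a small sketch. The function names are hypothetical and the model is deliberately simplified: it counts streams only and ignores bitrate and codec overhead.

```typescript
// Streams a single speaker must upload under each topology.
// "mesh" is a full peer-to-peer mesh; "sfu" is a Selective Forwarding Unit.
function uploadsPerSpeaker(topology: "mesh" | "sfu", otherParticipants: number): number {
  return topology === "mesh" ? otherParticipants : 1;
}

// Streams the SFU forwards: each speaker's track goes to every other participant.
function sfuForwardedStreams(speakers: number, listeners: number): number {
  const total = speakers + listeners;
  return speakers * (total - 1);
}

// One speaker, 50 listeners:
const meshUploads = uploadsPerSpeaker("mesh", 50); // 50 uploads from the speaker's device
const sfuUploads = uploadsPerSpeaker("sfu", 50);   // 1 upload from the speaker's device
const forwarded = sfuForwardedStreams(1, 50);      // 50 streams sent by the SFU instead
```

The total number of streams delivered is the same in both models. What changes is who pays for the fan-out: the speaker's uplink in a mesh, the server in an SFU.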

Here is what LiveKit handles for us:

WebRTC negotiation — ICE candidates, DTLS handshake, codec selection

Echo cancellation and noise suppression — Built into the WebRTC stack

Adaptive bitrate — Audio quality adjusts based on network conditions

Track publish/subscribe — Speakers publish their mic track; listeners subscribe automatically

Bandwidth management — Dynacast ensures we only forward tracks that participants are actually consuming

Why Self-Hosted?

This was never a question for us. At Oxy, we believe your conversations belong to you — not to a third-party provider who might log, analyze, or monetize your audio data. Self-hosting is a direct extension of the values we have held since day one: privacy, independence, and trust.

When you speak in a Room, your voice travels from your device to our SFU and back to the listeners. That is it. No third-party processors in the middle. No audio data leaving our infrastructure. No external company with access to what you say. We do not want to depend on anyone else when it comes to protecting our users, and we refuse to put your privacy in someone else’s hands.

Beyond privacy, self-hosting gives us:

True independence — We do not rely on any external service that could change its terms, raise prices, or shut down. Our infrastructure is ours. If a hosted provider decided to discontinue their service tomorrow, it would not affect a single Room on Mention.

Cost control — Hosted WebRTC services charge per-participant-minute. For a social platform where Rooms can run for hours with dozens of participants, costs would escalate quickly and could force us to limit your conversations. Our self-hosted LiveKit instance runs on our own DigitalOcean infrastructure at a predictable cost.

Full control — We configure everything: room timeouts, codec preferences, port ranges, TLS termination. If we need to tune performance or respond to an issue, we change a config file — not a support ticket. We answer to our users, not to a vendor.

We could have taken the easy route and plugged in a hosted API. But easy and right are not always the same thing. Our users deserve better, and self-hosting is how we deliver on that promise.

Our LiveKit instance runs as a Docker container behind Caddy (for automatic TLS via Let's Encrypt) on a dedicated droplet at livekit.mention.earth. UDP ports 50000-50200 are open for WebRTC media transport, with TCP fallback through the TURN server.

Role-Based Permissions

Rooms has a layered permission system that works across both Socket.IO and LiveKit:

Host

The Room creator. Has full control: can start/end the Room, approve/deny speaker requests, remove speakers, and of course speak. Their LiveKit token grants canPublish: true with canPublishSources: [MICROPHONE].

Speaker

A participant promoted by the host. Can unmute and publish audio. When the host approves a speaker request, two things happen simultaneously:

Socket.IO broadcasts the role change to all participants (UI update)

Our backend calls updateParticipantPermissions() on the LiveKit Room Service API, granting the user publish rights in the LiveKit room

This dual update ensures the UI and the media layer stay in sync. The speaker can immediately unmute and start talking.
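
A rough sketch of the dual update, with both layers hidden behind hypothetical interfaces (`ControlPlane`, `MediaPlane`, and `approveSpeaker` are illustrative names, not the real Socket.IO or LiveKit APIs). The sketch sequences the two calls, media first, so that a failed permission update never leaves the UI showing a speaker who cannot publish. That ordering is a choice made for this example only.

```typescript
// Hypothetical stand-ins for the Socket.IO namespace and the LiveKit Room Service client.
interface ControlPlane {
  broadcastRoleChange(roomId: string, userId: string, role: "speaker" | "listener"): void;
}

interface MediaPlane {
  setCanPublish(roomId: string, userId: string, canPublish: boolean): Promise<void>;
}

async function approveSpeaker(
  control: ControlPlane,
  media: MediaPlane,
  roomId: string,
  userId: string,
): Promise<void> {
  // 1. Grant publish rights on the media layer. If this rejects, nothing is broadcast.
  await media.setCanPublish(roomId, userId, true);
  // 2. Tell every participant about the new role so their UI updates.
  control.broadcastRoleChange(roomId, userId, "speaker");
}
```

Injecting both layers as interfaces also makes the flow testable with simple fakes, without a running SFU or socket server.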

Listener

The default role. Can hear all speakers but cannot publish audio. Their LiveKit token has canPublish: false. Even if they tried to publish a track client-side, LiveKit would reject it at the server level. Security is enforced server-side, not just in the UI.
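
The mapping from role to token permissions can be sketched as follows. The `canPublish` and `canPublishSources` fields mirror the grants quoted above; the rest of the `AudioGrant` shape, the `"MICROPHONE"` string value, and the `grantForRole` helper are assumptions for illustration rather than the exact LiveKit SDK types.

```typescript
type Role = "host" | "speaker" | "listener";

// Assumed grant shape, modeled on the fields mentioned in the text.
interface AudioGrant {
  roomJoin: true;
  room: string;
  canSubscribe: boolean;
  canPublish: boolean;
  canPublishSources: string[];
}

function grantForRole(role: Role, roomName: string): AudioGrant {
  // Hosts and speakers may publish; listeners may only subscribe.
  const canPublish = role === "host" || role === "speaker";
  return {
    roomJoin: true,
    room: roomName,
    canSubscribe: true,
    canPublish,
    // Restrict publishers to microphone audio; listeners get no sources at all.
    canPublishSources: canPublish ? ["MICROPHONE"] : [],
  };
}
```

Because the grant is computed on the backend and signed into the token, a modified client cannot widen its own permissions.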

Speaker Permission Modes

When creating a Room, the host can choose who is allowed to request to speak:

Everyone — Any participant can raise their hand

Followers — Only users who follow the host can request

Invited Only — Only users explicitly invited by the host can speak
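
These three modes reduce to a simple check when a raise-hand request arrives. The sketch below uses hypothetical names (`SpeakerPermission`, `RequestContext`, `canRequestToSpeak`) and assumes the backend already knows whether the requester follows the host or was invited.

```typescript
type SpeakerPermission = "everyone" | "followers" | "invited";

interface RequestContext {
  followsHost: boolean; // hypothetical: does the requester follow the host?
  invited: boolean;     // hypothetical: was the requester explicitly invited?
}

// One rule per mode; the Record type forces every mode to be handled.
const rules: Record<SpeakerPermission, (ctx: RequestContext) => boolean> = {
  everyone: () => true,
  followers: (ctx) => ctx.followsHost,
  invited: (ctx) => ctx.invited,
};

function canRequestToSpeak(mode: SpeakerPermission, ctx: RequestContext): boolean {
  return rules[mode](ctx);
}
```

As with publishing rights, a check like this belongs on the server, with the client using the same rule only to decide whether to show the raise-hand button.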

Rooms in Your Feed

Rooms are not isolated in a separate tab. They are woven into the fabric of Mention. When you create a Room, you can attach it to a post — just like you would attach an image, poll, or article.

Your followers will see a Room card in their feed. If the Room is live, the card pulses with a live indicator. Tapping the card joins the Room immediately. If it is scheduled, the card shows the scheduled time.

This was a deliberate design decision. We did not want Rooms to be a feature you have to go looking for. We wanted them to surface naturally in the content you are already browsing. A live Room from someone you follow should feel as natural as seeing their latest post.

The post attachment system supports the full Room lifecycle:

Scheduled — Card shows date/time, acts as an announcement

Live — Card shows participant count, pulsing indicator, "Join" action

Ended — Card shows it has ended (future: will link to recording)
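
As an illustration, the card states above could be derived from the Room attachment like this. All type and field names here are hypothetical, and the label text is a placeholder.

```typescript
type RoomStatus = "scheduled" | "live" | "ended";

interface RoomAttachment {
  status: RoomStatus;
  scheduledFor?: string;     // ISO 8601 timestamp, present for scheduled Rooms
  participantCount?: number; // present for live Rooms
}

interface RoomCard {
  label: string;
  pulsing: boolean;      // whether to show the live indicator
  action: "join" | null; // the only tap action in this sketch
}

function cardFor(room: RoomAttachment): RoomCard {
  if (room.status === "live") {
    return { label: `${room.participantCount ?? 0} listening`, pulsing: true, action: "join" };
  }
  if (room.status === "scheduled") {
    return { label: `Scheduled for ${room.scheduledFor ?? "a later time"}`, pulsing: false, action: null };
  }
  return { label: "Ended", pulsing: false, action: null };
}
```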

Cross-Platform from Day One

Rooms works on web, iOS, and Android from launch — whether you use it inside Mention or through the standalone Agora app. The LiveKit client SDK handles platform differences:

Web — Uses the browser WebRTC implementation. Remote audio tracks are attached to audio DOM elements for playback. We handle autoplay policies and element cleanup.

iOS and Android — Uses @livekit/react-native with react-native-webrtc under the hood. Audio session management is handled natively — we start the audio session when connecting and stop it on disconnect.

The UI is the same bottom sheet experience across all platforms, built with our existing sheet system. Open a Room, see the speakers, see the listeners, tap to mute, tap to raise your hand. It just works.

Redis-Backed State

In the early prototype, room state lived in a JavaScript Map in the backend process. If the process restarted, all active Rooms lost their participant lists. Not acceptable for production.

We migrated all room state to Redis, which we already use for the Socket.IO adapter (multi-instance pub/sub) and caching. The key schema is clean:

room:{roomId} — Room metadata (host, creation time)

room:{roomId}:participants — Hash of userId to role, mute state, join time

room:{roomId}:requests — Hash of pending speaker requests

This gives us atomic operations on participant state, automatic expiry capabilities, and the ability to inspect live room state with redis-cli for debugging. It also means our backend can scale horizontally — any instance can serve any Room.
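
A small sketch of helpers for this key schema. The key formats come from the list above; the JSON encoding of hash values and the `ParticipantRecord` field names are assumptions, since the actual storage format is not described here.

```typescript
// Key builders matching the schema above.
const roomKey = (roomId: string): string => `room:${roomId}`;
const participantsKey = (roomId: string): string => `room:${roomId}:participants`;
const requestsKey = (roomId: string): string => `room:${roomId}:requests`;

// Assumed shape of one entry in the participants hash (field = userId).
interface ParticipantRecord {
  role: "host" | "speaker" | "listener";
  muted: boolean;
  joinedAt: number; // Unix epoch milliseconds
}

// Redis hash values are strings, so this sketch serializes each record as JSON.
function encodeParticipant(record: ParticipantRecord): string {
  return JSON.stringify(record);
}

function decodeParticipant(value: string): ParticipantRecord {
  return JSON.parse(value) as ParticipantRecord;
}
```

With keys shaped like this, a debugging session might run `HGETALL room:<roomId>:participants` in redis-cli to see every participant's role and mute state at a glance.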

The Architecture at a Glance

Here is the full picture of how everything fits together:

DigitalOcean Droplet — Runs LiveKit SFU in Docker, with Caddy for TLS. Handles all WebRTC audio transport.

Mention Backend (App Platform) — Token generation, room lifecycle, speaker management, Socket.IO /rooms namespace.

Redis — Room state persistence, Socket.IO adapter for multi-instance support.

Clients (Expo React Native + Web) — Dual connection: Socket.IO for control events, LiveKit SDK for audio tracks.

The separation of concerns is clean. Socket.IO never touches audio data. LiveKit never touches application logic. Redis bridges them by storing the authoritative room state that both layers reference.

Agora: Rooms With Its Own Front Door

Just as Messenger lives inside Facebook and as its own standalone app, Rooms is built into Mention and also exists as Agora — a dedicated app at agora.mention.earth. Same infrastructure, same rooms, same community. Whether you open Rooms inside Mention or launch Agora directly, you are in the same ecosystem. Agora simply gives Rooms a focused experience built entirely around live audio, for users who want to jump straight in.

What We Are Building Next

Rooms is launching today, but we are just getting started. Here is what is on our roadmap:

Recording and Playback — Record your Room and let people listen later. LiveKit supports egress (recording) natively, and we plan to integrate it so hosts can opt into recording. Imagine turning every Room into an on-demand podcast episode.

Live Captions — Real-time transcription powered by AI. Follow along with text even when you cannot listen with audio. This will make Rooms accessible to deaf and hard-of-hearing users, and useful in noise-sensitive environments.

Co-hosting — Invite another user as a co-host who can manage speakers and moderate the room alongside you. Essential for larger Rooms where a single host cannot manage everything alone.

Reactions — Listeners can send emoji reactions that float across the screen. A lightweight way to participate without speaking. Think applause, laughter, and raised hands — all animated in real-time.

Scheduled Rooms with Reminders — Schedule a Room for the future and let your followers set reminders so they do not miss it. We will send push notifications when the Room goes live.

Room Analytics — Detailed stats for hosts: peak listeners, total joins, average listen duration, engagement over time. Understand your audience and optimize your content.

Clips — Highlight and share short audio clips from Rooms. Let your best moments live beyond the live session.

Try It Now

Rooms is available today inside Mention at mention.earth and as the standalone Agora app at agora.mention.earth. Create your first Room, go live, and start talking. We cannot wait to hear what you build with it.

If you are a developer interested in how we built this, check out the Oxy ecosystem at oxy.so — all of our infrastructure is built in-house, from the backend services to the real-time audio pipeline.

Welcome to the future of conversation on Mention. Welcome to Rooms.

The Biggest Feature Drop in Mention History

Today we are incredibly excited to announce Rooms — live audio rooms built directly into Mention. Think of it like Messenger inside Facebook: Rooms is a core part of the Mention experience, and it also has its own standalone app — Agora, available at agora.mention.earth. This is the single largest feature we have ever shipped, and it fundamentally changes what Mention is: from a social platform where you read and write, to one where you can talk and listen in real-time.

Rooms lets any user create a live audio room, invite speakers, and broadcast to an unlimited audience of listeners. Think of it as your own podcast studio, town hall, or casual hangout — all inside Mention, or through the dedicated Agora app.

We did not take shortcuts. We built this on top of WebRTC with a self-hosted Selective Forwarding Unit (SFU), running on our own infrastructure. No third-party audio APIs. No usage-based pricing that would force us to limit your conversations. Just raw, low-latency, high-quality audio — from our servers to your ears.

Let us dive into everything.

What Are Rooms?

A Room is a live audio room hosted by a Mention or Agora user. When you create a Room, you become the host. You give it a title, optionally a topic and description, and then you go live. That is it — you are broadcasting.

Other users can join your Room as listeners. They hear everything the speakers say in real-time, with sub-second latency. If a listener wants to speak, they can raise their hand (request to speak), and the host can approve or deny the request.

Once approved, a listener becomes a speaker. Speakers can unmute their microphone and talk to the entire room. The host can also remove speakers at any time, returning them to listener status.

The entire flow is designed to feel natural and effortless:

Create — Tap the "+" button on the Rooms screen. Give your Room a title and topic.

Go Live — Hit "Start Now" and you are broadcasting. A LiveKit room is created on our SFU, and your audio session begins.

Invite and Share — Share your Room. It appears in the Rooms directory for anyone to join.

Manage — Approve or deny speaker requests. Mute yourself. Remove speakers. You are in full control.

End — When you are done, end the Room. The LiveKit room is torn down, Redis state is cleaned up, and the Room is archived.

How It Works Under the Hood

This is where it gets interesting. We built Rooms with a dual-layer architecture that separates the control plane from the media plane.

Layer 1: Socket.IO — The Control Plane

Socket.IO handles everything that is not audio. When you join a Room, your client establishes a WebSocket connection to our backend through the /rooms Socket.IO namespace. This connection manages:

Participant tracking — Who is in the room, their roles (host, speaker, listener), and their mute state

Speaker requests — The raise-hand, approve/deny, role-change flow

Room lifecycle — Start, end, and participant join/leave events

Real-time UI updates — Mute indicators, participant count, speaker list changes

All room state is persisted in Redis, not in-memory. This means if our backend process restarts, your Room survives. It also means we can scale horizontally — multiple backend instances can serve the same Room through Redis pub/sub.

Layer 2: LiveKit SFU — The Media Plane

Audio is handled entirely by LiveKit, an open-source WebRTC Selective Forwarding Unit that we self-host on our own DigitalOcean infrastructure.

When a Room goes live, our backend creates a LiveKit room via the Room Service API. When a client joins, it requests a LiveKit access token from our backend (POST /api/rooms/:id/token). The token encodes the user identity, the room name, and their permissions — can they publish audio? Can they subscribe?

The client then connects directly to the LiveKit SFU over WebRTC. Audio tracks flow peer-to-SFU, not peer-to-peer. This is critical for scalability — in a peer-to-peer model, a room with 50 listeners would require the speaker to upload 50 separate audio streams. With an SFU, the speaker uploads once, and LiveKit distributes to all subscribers.

Here is what LiveKit handles for us:

WebRTC negotiation — ICE candidates, DTLS handshake, codec selection

Echo cancellation and noise suppression — Built into the WebRTC stack

Adaptive bitrate — Audio quality adjusts based on network conditions

Track publish/subscribe — Speakers publish their mic track; listeners subscribe automatically

Bandwidth management — Dynacast ensures we only forward tracks that participants are actually consuming

Why Self-Hosted?

This was never a question for us. At Oxy, we believe your conversations belong to you — not to a third-party provider who might log, analyze, or monetize your audio data. Self-hosting is a direct extension of the values we have held since day one: privacy, independence, and trust.

When you speak in a Room, your voice travels from your device to our SFU and back to the listeners. That is it. No third-party processors in the middle. No audio data leaving our infrastructure. No external company with access to what you say. We do not want to depend on anyone else when it comes to protecting our users, and we refuse to put your privacy in someone else’s hands.

Beyond privacy, self-hosting gives us:

True independence — We do not rely on any external service that could change its terms, raise prices, or shut down. Our infrastructure is ours. If a hosted provider decided to discontinue their service tomorrow, it would not affect a single Room on Mention.

Cost control — Hosted WebRTC services charge per-participant-minute. For a social platform where Rooms can run for hours with dozens of participants, costs would escalate quickly and could force us to limit your conversations. Our self-hosted LiveKit instance runs on our own DigitalOcean infrastructure at a predictable cost.

Full control — We configure everything: room timeouts, codec preferences, port ranges, TLS termination. If we need to tune performance or respond to an issue, we change a config file — not a support ticket. We answer to our users, not to a vendor.

We could have taken the easy route and plugged in a hosted API. But easy and right are not always the same thing. Our users deserve better, and self-hosting is how we deliver on that promise.

Our LiveKit instance runs as a Docker container behind Caddy (for automatic TLS via Let us Encrypt) on a dedicated droplet at livekit.mention.earth. UDP ports 50000-50200 are open for WebRTC media transport, with TCP fallback through the TURN server.

Role-Based Permissions

Rooms has a layered permission system that works across both Socket.IO and LiveKit:

Host

The Room creator. Has full control: can start/end the Room, approve/deny speaker requests, remove speakers, and of course speak. Their LiveKit token grants canPublish: true with canPublishSources: [MICROPHONE].

Speaker

A participant promoted by the host. Can unmute and publish audio. When the host approves a speaker request, two things happen simultaneously:

Socket.IO broadcasts the role change to all participants (UI update)

Our backend calls updateParticipantPermissions() on LiveKit Room Service API, granting the user publish rights in the LiveKit room

This dual update ensures the UI and the media layer stay in sync. The speaker can immediately unmute and start talking.

Listener

The default role. Can hear all speakers but cannot publish audio. Their LiveKit token has canPublish: false. Even if they tried to publish a track client-side, LiveKit would reject it at the server level. Security is enforced server-side, not just in the UI.

Speaker Permission Modes

When creating a Room, the host can choose who is allowed to request to speak:

Everyone — Any participant can raise their hand

Followers — Only users who follow the host can request

Invited Only — Only users explicitly invited by the host can speak

Rooms in Your Feed

Rooms are not isolated in a separate tab. They are woven into the fabric of Mention. When you create a Room, you can attach it to a post — just like you would attach an image, poll, or article.

Your followers will see a Room card in their feed. If the Room is live, the card pulses with a live indicator. Tapping the card joins the Room immediately. If it is scheduled, the card shows the scheduled time.

This was a deliberate design decision. We did not want Rooms to be a feature you have to go looking for. We wanted them to surface naturally in the content you are already browsing. A live Room from someone you follow should feel as natural as seeing their latest post.

The post attachment system supports the full Room lifecycle:

Scheduled — Card shows date/time, acts as an announcement

Live — Card shows participant count, pulsing indicator, "Join" action

Ended — Card shows it has ended (future: will link to recording)

Cross-Platform from Day One

Rooms works on web, iOS, and Android from launch — whether you use it inside Mention or through the standalone Agora app. The LiveKit client SDK handles platform differences:

Web — Uses the browser WebRTC implementation. Remote audio tracks are attached to audio DOM elements for playback. We handle autoplay policies and element cleanup.

iOS and Android — Uses @livekit/react-native with react-native-webrtc under the hood. Audio session management is handled natively — we start the audio session when connecting and stop it on disconnect.

The UI is the same bottom sheet experience across all platforms, built with our existing sheet system. Open a Room, see the speakers, see the listeners, tap to mute, tap to raise your hand. It just works.

Redis-Backed State

In the early prototype, room state lived in a JavaScript Map in the backend process. If the process restarted, all active Rooms lost their participant lists. Not acceptable for production.

We migrated all room state to Redis, which we already use for Socket.IO adapter (multi-instance pub/sub) and caching. The key schema is clean:

room:{roomId} — Room metadata (host, creation time)

room:{roomId}:participants — Hash of userId to role, mute state, join time

room:{roomId}:requests — Hash of pending speaker requests

This gives us atomic operations on participant state, automatic expiry capabilities, and the ability to inspect live room state with redis-cli for debugging. It also means our backend can scale horizontally — any instance can serve any Room.

The Architecture at a Glance

Here is the full picture of how everything fits together:

DigitalOcean Droplet — Runs LiveKit SFU in Docker, with Caddy for TLS. Handles all WebRTC audio transport.

Mention Backend (App Platform) — Token generation, room lifecycle, speaker management, Socket.IO /rooms namespace.

Redis — Room state persistence, Socket.IO adapter for multi-instance support.

Clients (Expo React Native + Web) — Dual connection: Socket.IO for control events, LiveKit SDK for audio tracks.

The separation of concerns is clean. Socket.IO never touches audio data. LiveKit never touches application logic. Redis bridges them by storing the authoritative room state that both layers reference.

Agora: Rooms With Its Own Front Door

Just as Messenger lives inside Facebook and as its own standalone app, Rooms is built into Mention and also exists as Agora — a dedicated app at agora.mention.earth. Same infrastructure, same rooms, same community. Whether you open Rooms inside Mention or launch Agora directly, you are in the same ecosystem. Agora simply gives Rooms a focused experience built entirely around live audio, for users who want to jump straight in.

What We Are Building Next

Rooms is launching today, but we are just getting started. Here is what is on our roadmap:

Recording and Playback — Record your Room and let people listen later. LiveKit supports egress (recording) natively, and we plan to integrate it so hosts can opt into recording. Imagine turning every Room into an on-demand podcast episode.

Live Captions — Real-time transcription powered by AI. Follow along with text even when you cannot listen with audio. This will make Rooms accessible to deaf and hard-of-hearing users, and useful in noise-sensitive environments.

Co-hosting — Invite another user as a co-host who can manage speakers and moderate the room alongside you. Essential for larger Rooms where a single host cannot manage everything alone.

Reactions — Listeners can send emoji reactions that float across the screen. A lightweight way to participate without speaking. Think applause, laughter, and raised hands — all animated in real-time.

Scheduled Rooms with Reminders — Schedule a Room for the future and let your followers set reminders so they do not miss it. We will send push notifications when the Room goes live.

Room Analytics — Detailed stats for hosts: peak listeners, total joins, average listen duration, engagement over time. Understand your audience and optimize your content.

Clips — Highlight and share short audio clips from Rooms. Let your best moments live beyond the live session.

Try It Now

Rooms is available today inside Mention at mention.earth and as the standalone Agora app at agora.mention.earth. Create your first Room, go live, and start talking. We can not wait to hear what you build with it.

If you are a developer interested in how we built this, check out the Oxy ecosystem at oxy.so — all of our infrastructure is built in-house, from the backend services to the real-time audio pipeline.

Welcome to the future of conversation on Mention. Welcome to Rooms.

The Biggest Feature Drop in Mention History

Today we are incredibly excited to announce Rooms — live audio rooms built directly into Mention. Think of it like Messenger inside Facebook: Rooms is a core part of the Mention experience, and it also has its own standalone app — Agora, available at agora.mention.earth. This is the single largest feature we have ever shipped, and it fundamentally changes what Mention is: from a social platform where you read and write, to one where you can talk and listen in real-time.

Rooms lets any user create a live audio room, invite speakers, and broadcast to an unlimited audience of listeners. Think of it as your own podcast studio, town hall, or casual hangout — all inside Mention, or through the dedicated Agora app.

We did not take shortcuts. We built this on top of WebRTC with a self-hosted Selective Forwarding Unit (SFU), running on our own infrastructure. No third-party audio APIs. No usage-based pricing that would force us to limit your conversations. Just raw, low-latency, high-quality audio — from our servers to your ears.

Let us dive into everything.

What Are Rooms?

A Room is a live audio room hosted by a Mention or Agora user. When you create a Room, you become the host. You give it a title, optionally a topic and description, and then you go live. That is it — you are broadcasting.

Other users can join your Room as listeners. They hear everything the speakers say in real-time, with sub-second latency. If a listener wants to speak, they can raise their hand (request to speak), and the host can approve or deny the request.

Once approved, a listener becomes a speaker. Speakers can unmute their microphone and talk to the entire room. The host can also remove speakers at any time, returning them to listener status.

The entire flow is designed to feel natural and effortless:

Create — Tap the "+" button on the Rooms screen. Give your Room a title and topic.

Go Live — Hit "Start Now" and you are broadcasting. A LiveKit room is created on our SFU, and your audio session begins.

Invite and Share — Share your Room. It appears in the Rooms directory for anyone to join.

Manage — Approve or deny speaker requests. Mute yourself. Remove speakers. You are in full control.

End — When you are done, end the Room. The LiveKit room is torn down, Redis state is cleaned up, and the Room is archived.

How It Works Under the Hood

This is where it gets interesting. We built Rooms with a dual-layer architecture that separates the control plane from the media plane.

Layer 1: Socket.IO — The Control Plane

Socket.IO handles everything that is not audio. When you join a Room, your client establishes a WebSocket connection to our backend through the /rooms Socket.IO namespace. This connection manages:

Participant tracking — Who is in the room, their roles (host, speaker, listener), and their mute state

Speaker requests — The raise-hand, approve/deny, role-change flow

Room lifecycle — Start, end, and participant join/leave events

Real-time UI updates — Mute indicators, participant count, speaker list changes

All room state is persisted in Redis, not in-memory. This means if our backend process restarts, your Room survives. It also means we can scale horizontally — multiple backend instances can serve the same Room through Redis pub/sub.

Layer 2: LiveKit SFU — The Media Plane

Audio is handled entirely by LiveKit, an open-source WebRTC Selective Forwarding Unit that we self-host on our own DigitalOcean infrastructure.

When a Room goes live, our backend creates a LiveKit room via the Room Service API. When a client joins, it requests a LiveKit access token from our backend (POST /api/rooms/:id/token). The token encodes the user identity, the room name, and their permissions — can they publish audio? Can they subscribe?

The client then connects directly to the LiveKit SFU over WebRTC. Audio tracks flow peer-to-SFU, not peer-to-peer. This is critical for scalability — in a peer-to-peer model, a room with 50 listeners would require the speaker to upload 50 separate audio streams. With an SFU, the speaker uploads once, and LiveKit distributes to all subscribers.

Here is what LiveKit handles for us:

WebRTC negotiation — ICE candidates, DTLS handshake, codec selection

Echo cancellation and noise suppression — Built into the WebRTC stack

Adaptive bitrate — Audio quality adjusts based on network conditions

Track publish/subscribe — Speakers publish their mic track; listeners subscribe automatically

Bandwidth management — Dynacast ensures we only forward tracks that participants are actually consuming

Why Self-Hosted?

This was never a question for us. At Oxy, we believe your conversations belong to you — not to a third-party provider who might log, analyze, or monetize your audio data. Self-hosting is a direct extension of the values we have held since day one: privacy, independence, and trust.

When you speak in a Room, your voice travels from your device to our SFU and back to the listeners. That is it. No third-party processors in the middle. No audio data leaving our infrastructure. No external company with access to what you say. We do not want to depend on anyone else when it comes to protecting our users, and we refuse to put your privacy in someone else’s hands.

Beyond privacy, self-hosting gives us:

True independence — We do not rely on any external service that could change its terms, raise prices, or shut down. Our infrastructure is ours. If a hosted provider decided to discontinue their service tomorrow, it would not affect a single Room on Mention.

Cost control — Hosted WebRTC services charge per-participant-minute. For a social platform where Rooms can run for hours with dozens of participants, costs would escalate quickly and could force us to limit your conversations. Our self-hosted LiveKit instance runs on our own DigitalOcean infrastructure at a predictable cost.

Full control — We configure everything: room timeouts, codec preferences, port ranges, TLS termination. If we need to tune performance or respond to an issue, we change a config file — not a support ticket. We answer to our users, not to a vendor.

We could have taken the easy route and plugged in a hosted API. But easy and right are not always the same thing. Our users deserve better, and self-hosting is how we deliver on that promise.

Our LiveKit instance runs as a Docker container behind Caddy (for automatic TLS via Let's Encrypt) on a dedicated droplet at livekit.mention.earth. UDP ports 50000-50200 are open for WebRTC media transport, with TCP fallback through the TURN server.

Role-Based Permissions

Rooms has a layered permission system that works across both Socket.IO and LiveKit:

Host

The Room creator. Has full control: can start/end the Room, approve/deny speaker requests, remove speakers, and of course speak. Their LiveKit token grants canPublish: true with canPublishSources: [MICROPHONE].

Speaker

A participant promoted by the host. Can unmute and publish audio. When the host approves a speaker request, two things happen simultaneously:

Socket.IO broadcasts the role change to all participants (UI update)

Our backend calls updateParticipantPermissions() on the LiveKit Room Service API, granting the user publish rights in the LiveKit room

This dual update ensures the UI and the media layer stay in sync. The speaker can immediately unmute and start talking.
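One way to picture the dual update is a small function that talks to both layers through narrow interfaces. The sketch below is an assumption-laden illustration: ControlPlane, MediaPlane, and approveSpeaker are hypothetical names, and the two calls are ordered (media first) rather than truly simultaneous, so that a failed grant never produces a UI that promises publish rights the media layer did not give.

```typescript
type Role = "host" | "speaker" | "listener";

// Hypothetical ports. Real implementations would wrap the Socket.IO
// namespace and the LiveKit Room Service API respectively.
interface ControlPlane {
  broadcastRoleChange(roomId: string, userId: string, role: Role): void;
}

interface MediaPlane {
  setCanPublish(roomId: string, userId: string, canPublish: boolean): Promise<void>;
}

async function approveSpeaker(
  control: ControlPlane,
  media: MediaPlane,
  roomId: string,
  userId: string,
): Promise<void> {
  // Media layer first: if this rejects, nothing is broadcast and the
  // participant stays a listener everywhere.
  await media.setCanPublish(roomId, userId, true);
  // Only then tell every client to update its UI.
  control.broadcastRoleChange(roomId, userId, "speaker");
}
```

Removing a speaker would be the mirror image: revoke publish rights on the media layer, then broadcast the listener role.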

Listener

The default role. Can hear all speakers but cannot publish audio. Their LiveKit token has canPublish: false. Even if they tried to publish a track client-side, LiveKit would reject it at the server level. Security is enforced server-side, not just in the UI.
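The three roles map naturally onto token grants. The sketch below builds a plain object whose field names mirror the grant fields mentioned above (canPublish, canPublishSources); the AudioRoomGrant type and grantForRole helper are illustrative, and the microphone source is written as a plain string where the LiveKit SDK uses its own track source type.

```typescript
type Role = "host" | "speaker" | "listener";

// Illustrative shape only; field names follow the grants named in the text.
interface AudioRoomGrant {
  roomJoin: true;
  room: string;
  canSubscribe: boolean;
  canPublish: boolean;
  canPublishSources: "microphone"[];
}

function grantForRole(room: string, role: Role): AudioRoomGrant {
  // Hosts and speakers may publish; listeners may only subscribe.
  const canPublish = role === "host" || role === "speaker";
  return {
    roomJoin: true,
    room,
    canSubscribe: true,
    canPublish,
    // Audio-only product: the microphone is the only source ever allowed.
    canPublishSources: canPublish ? ["microphone"] : [],
  };
}
```

Because the grant is computed on the backend and signed into the token, a modified client cannot promote itself; at most it can ask for a new token and be refused.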

Speaker Permission Modes

When creating a Room, the host can choose who is allowed to request to speak:

Everyone — Any participant can raise their hand

Followers — Only users who follow the host can request

Invited Only — Only users explicitly invited by the host can speak
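The three modes reduce to a single server-side check. In this sketch the mode names, the RequestContext fields, and the decision to treat Invited Only as gating the request itself are all assumptions for illustration.

```typescript
type SpeakerPermissionMode = "everyone" | "followers" | "invited";

// Hypothetical inputs the backend would look up before accepting a request.
interface RequestContext {
  userId: string;
  hostId: string;
  followsHost: boolean;
  invitedUserIds: ReadonlySet<string>;
}

function canRequestToSpeak(mode: SpeakerPermissionMode, ctx: RequestContext): boolean {
  // The host is already a speaker and never needs to raise a hand.
  if (ctx.userId === ctx.hostId) return false;
  switch (mode) {
    case "everyone":
      return true;
    case "followers":
      return ctx.followsHost;
    case "invited":
      return ctx.invitedUserIds.has(ctx.userId);
  }
}
```

Running this check on the backend, before a request is stored, keeps the rule enforceable even if a client shows the raise-hand button when it should not.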

Rooms in Your Feed

Rooms are not isolated in a separate tab. They are woven into the fabric of Mention. When you create a Room, you can attach it to a post — just like you would attach an image, poll, or article.

Your followers will see a Room card in their feed. If the Room is live, the card pulses with a live indicator. Tapping the card joins the Room immediately. If it is scheduled, the card shows the scheduled time.

This was a deliberate design decision. We did not want Rooms to be a feature you have to go looking for. We wanted them to surface naturally in the content you are already browsing. A live Room from someone you follow should feel as natural as seeing their latest post.

The post attachment system supports the full Room lifecycle:

Scheduled — Card shows date/time, acts as an announcement

Live — Card shows participant count, pulsing indicator, "Join" action

Ended — Card shows it has ended (future: will link to recording)
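The three card states lend themselves to a discriminated union, so that each state carries only the data it needs. The type and field names below are invented for the example; the labels are placeholders rather than the copy used in the app.

```typescript
type RoomCard =
  | { status: "scheduled"; scheduledFor: Date }
  | { status: "live"; participantCount: number }
  | { status: "ended" };

interface CardView {
  label: string;
  pulsing: boolean;
  action: "join" | null;
}

function toCardView(card: RoomCard): CardView {
  switch (card.status) {
    case "scheduled":
      // Acts as an announcement: shows the time, nothing to join yet.
      return { label: `Scheduled for ${card.scheduledFor.toISOString()}`, pulsing: false, action: null };
    case "live":
      return { label: `Live, ${card.participantCount} participants`, pulsing: true, action: "join" };
    case "ended":
      return { label: "Ended", pulsing: false, action: null };
  }
}
```

With this shape the compiler rejects a card that is live but has no participant count, or scheduled but has no time.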

Cross-Platform from Day One

Rooms works on web, iOS, and Android from launch — whether you use it inside Mention or through the standalone Agora app. The LiveKit client SDK handles platform differences:

Web — Uses the browser WebRTC implementation. Remote audio tracks are attached to audio DOM elements for playback. We handle autoplay policies and element cleanup.

iOS and Android — Uses @livekit/react-native with react-native-webrtc under the hood. Audio session management is handled natively — we start the audio session when connecting and stop it on disconnect.

The UI is the same bottom sheet experience across all platforms, built with our existing sheet system. Open a Room, see the speakers, see the listeners, tap to mute, tap to raise your hand. It just works.

Redis-Backed State

In the early prototype, room state lived in a JavaScript Map in the backend process. If the process restarted, all active Rooms lost their participant lists. Not acceptable for production.

We migrated all room state to Redis, which we already use for the Socket.IO adapter (multi-instance pub/sub) and for caching. The key schema is clean:

room:{roomId} — Room metadata (host, creation time)

room:{roomId}:participants — Hash of userId to role, mute state, join time

room:{roomId}:requests — Hash of pending speaker requests

This gives us atomic operations on participant state, automatic expiry capabilities, and the ability to inspect live room state with redis-cli for debugging. It also means our backend can scale horizontally — any instance can serve any Room.
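A small helper module keeps the key schema in one place. The key formats below come straight from the list above; the ParticipantRecord shape and the choice to store each hash value as JSON are assumptions for this sketch, since the post does not say how values are serialized.

```typescript
// Key builders for the schema described above.
const roomKeys = {
  meta: (roomId: string): string => `room:${roomId}`,
  participants: (roomId: string): string => `room:${roomId}:participants`,
  requests: (roomId: string): string => `room:${roomId}:requests`,
};

// Assumed value stored per userId field in the participants hash.
interface ParticipantRecord {
  role: "host" | "speaker" | "listener";
  muted: boolean;
  joinedAt: number; // Unix epoch milliseconds
}

function encodeParticipant(record: ParticipantRecord): string {
  return JSON.stringify(record);
}

// Validates on read so a corrupted hash field fails loudly instead of
// leaking an invalid role into the UI.
function decodeParticipant(raw: string): ParticipantRecord {
  const value = JSON.parse(raw) as Partial<ParticipantRecord>;
  const role = value.role;
  if (role !== "host" && role !== "speaker" && role !== "listener") {
    throw new Error("malformed participant record: role");
  }
  if (typeof value.muted !== "boolean" || typeof value.joinedAt !== "number") {
    throw new Error("malformed participant record: muted/joinedAt");
  }
  return { role, muted: value.muted, joinedAt: value.joinedAt };
}
```

With keys built this way, a debugging session in redis-cli can go directly to a key such as room:abc:participants and read the same records the backend sees.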

The Architecture at a Glance

Here is the full picture of how everything fits together:

DigitalOcean Droplet — Runs LiveKit SFU in Docker, with Caddy for TLS. Handles all WebRTC audio transport.

Mention Backend (App Platform) — Token generation, room lifecycle, speaker management, Socket.IO /rooms namespace.

Redis — Room state persistence, Socket.IO adapter for multi-instance support.

Clients (Expo React Native + Web) — Dual connection: Socket.IO for control events, LiveKit SDK for audio tracks.

The separation of concerns is clean. Socket.IO never touches audio data. LiveKit never touches application logic. Redis bridges them by storing the authoritative room state that both layers reference.

Agora: Rooms With Its Own Front Door

Just as Messenger lives inside Facebook and as its own standalone app, Rooms is built into Mention and also exists as Agora — a dedicated app at agora.mention.earth. Same infrastructure, same rooms, same community. Whether you open Rooms inside Mention or launch Agora directly, you are in the same ecosystem. Agora simply gives Rooms a focused experience built entirely around live audio, for users who want to jump straight in.

What We Are Building Next

Rooms is launching today, but we are just getting started. Here is what is on our roadmap:

Recording and Playback — Record your Room and let people listen later. LiveKit supports egress (recording) natively, and we plan to integrate it so hosts can opt into recording. Imagine turning every Room into an on-demand podcast episode.

Live Captions — Real-time transcription powered by AI. Follow along in text even when you cannot listen to the audio. This will make Rooms accessible to deaf and hard-of-hearing users, and useful in noise-sensitive environments.

Co-hosting — Invite another user as a co-host who can manage speakers and moderate the room alongside you. Essential for larger Rooms where a single host cannot manage everything alone.

Reactions — Listeners can send emoji reactions that float across the screen. A lightweight way to participate without speaking. Think applause, laughter, and raised hands — all animated in real-time.

Scheduled Rooms with Reminders — Schedule a Room for the future and let your followers set reminders so they do not miss it. We will send push notifications when the Room goes live.

Room Analytics — Detailed stats for hosts: peak listeners, total joins, average listen duration, engagement over time. Understand your audience and optimize your content.

Clips — Highlight and share short audio clips from Rooms. Let your best moments live beyond the live session.

Try It Now

Rooms is available today inside Mention at mention.earth and as the standalone Agora app at agora.mention.earth. Create your first Room, go live, and start talking. We cannot wait to hear what you build with it.

If you are a developer interested in how we built this, check out the Oxy ecosystem at oxy.so — all of our infrastructure is built in-house, from the backend services to the real-time audio pipeline.

Welcome to the future of conversation on Mention. Welcome to Rooms.

Outreach & Initiatives

Services

Innovation

Sustainability & Impact

Our mission is to fuel innovation with purpose, providing the tools and support to build tools that solve global challenges.

Made with 💚 in the 🌎 by Oxy.

Corporate Information
