Sunday, February 15, 2026

Alia’s Voice Just Got Expressive and It Can Use Tools While Talking

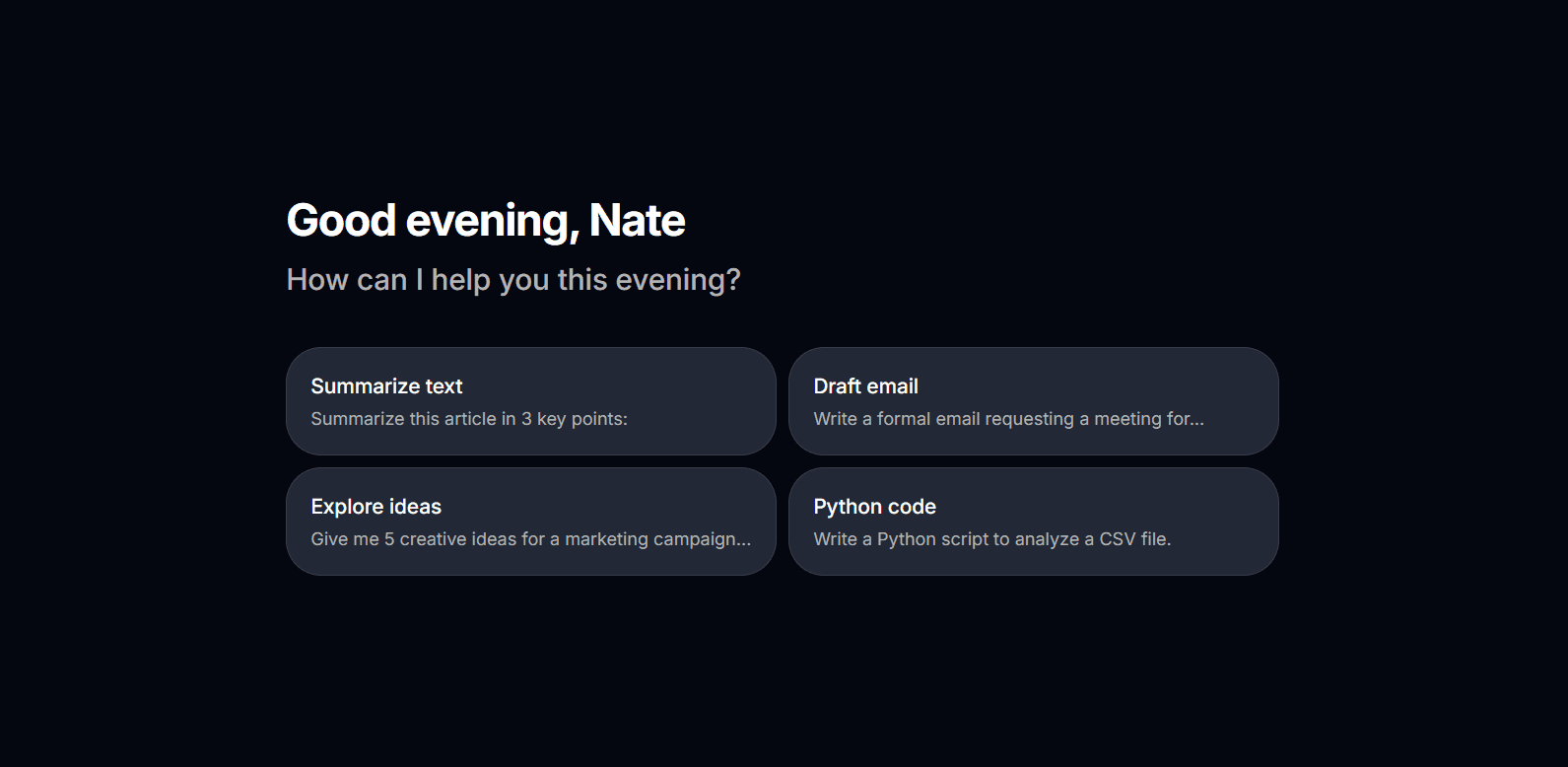

When we shipped voice mode two weeks ago, it was already a step change: real-time conversations with Alia powered by LiveKit, with natural turn-taking and interruption support. Today, voice mode gets two updates that change how talking to Alia feels: expressive vocal delivery and the ability to run tools mid-conversation.

Voice Mode Just Got a Personality

Alia's voice is no longer monotone. With vocal expressiveness, Alia now adapts its tone, pacing, and emotional delivery to match the context of the conversation.

Ask a serious question and the response is measured and thoughtful. Brainstorm an idea and the energy picks up. Share something exciting and Alia matches that enthusiasm. Each adjustment is subtle, but together they make voice conversations feel far more natural: less like talking to a system, more like talking to someone who's actually listening.

This isn't a gimmick. Expressive voice is about reducing the cognitive gap between how you communicate with people and how you communicate with AI. When the voice matches the intent, you stay in flow longer and get more out of the conversation.

Use Your Tools, Hands-Free

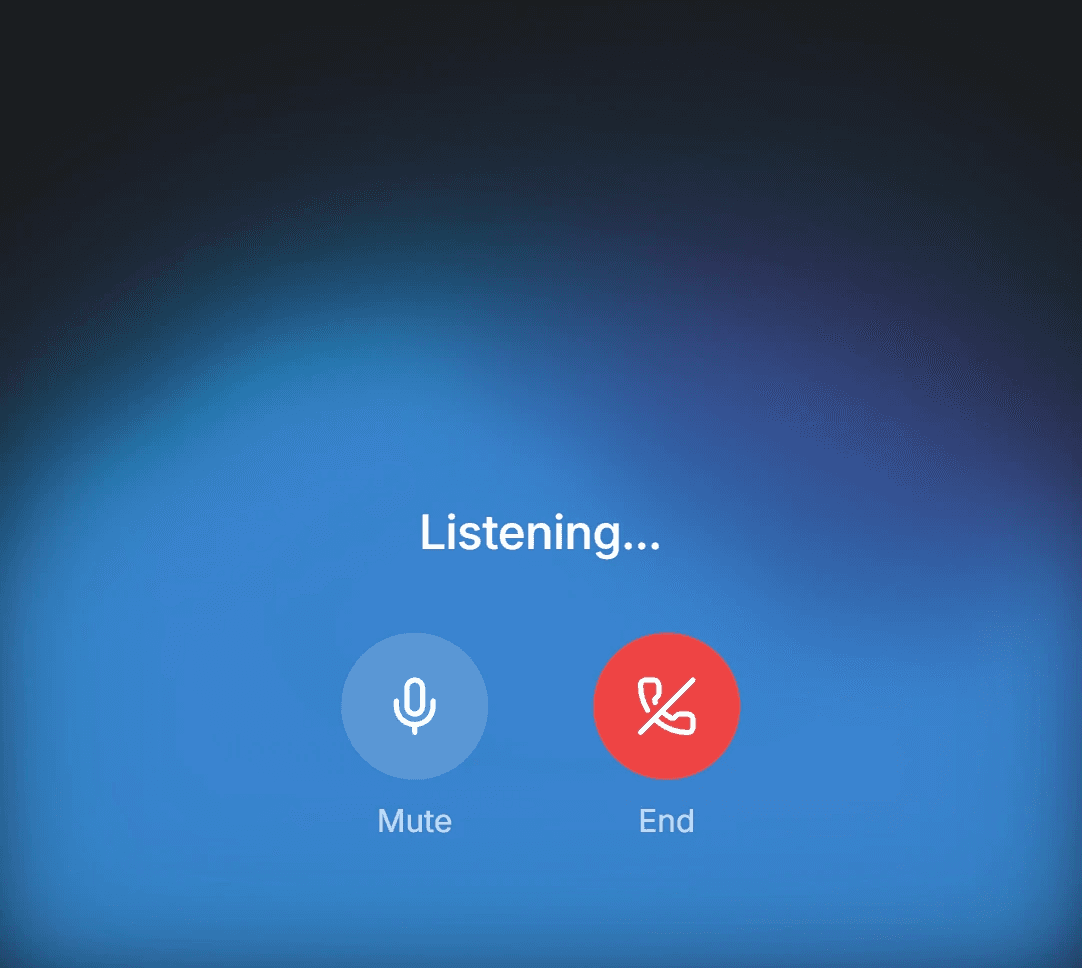

Voice mode can now execute tools while you're talking. Ask Alia to search the web, look something up, run a code snippet, or pull data — and it happens server-side, mid-conversation, without you ever switching to text.

Here's what that looks like in practice:

"Search for the latest news on renewable energy" — Alia runs the search and reads back the highlights

"What's the weather in Barcelona this weekend?" — Tool fires, result streams back naturally

"Run this Python function and tell me the output" — Code executes server-side, Alia walks you through the result

Previously, tool use required text input. Now the entire loop (your voice request, tool execution, and spoken response) happens in real time, with no switch to the keyboard. This is what hands-free AI actually looks like.
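For readers curious how a loop like this fits together, here is a minimal sketch in Python. It is not Alia's implementation: the tool names, the `ToolCall` type, and `handle_turn` are all hypothetical, the tool results are placeholders, and speech-to-text and text-to-speech are left out. It assumes the spoken request has already been transcribed and mapped to a tool call, and shows only the server-side dispatch step that turns that call into a sentence to speak.

```python
from dataclasses import dataclass
from typing import Callable, Dict


# Hypothetical tools. Real ones would call a search or weather service;
# these return placeholder strings so the sketch runs on its own.
def search_web(query: str) -> str:
    return f"Top results for '{query}' (placeholder)"


def get_weather(city: str) -> str:
    return f"Forecast for {city} (placeholder)"


# Registry mapping a tool name to its handler (names are assumptions).
TOOLS: Dict[str, Callable[[str], str]] = {
    "search_web": search_web,
    "get_weather": get_weather,
}


@dataclass
class ToolCall:
    """A tool request derived from the user's transcribed speech."""
    name: str
    argument: str


def handle_turn(call: ToolCall) -> str:
    """Run the requested tool and return the text to be spoken back."""
    handler = TOOLS.get(call.name)
    if handler is None:
        # Unknown tool: answer gracefully instead of raising an error.
        return f"Sorry, I can't use a tool called {call.name}."
    result = handler(call.argument)
    return f"Here's what I found. {result}"


if __name__ == "__main__":
    print(handle_turn(ToolCall("get_weather", "Barcelona")))
```

In a real voice pipeline, the returned string would be handed to the speech layer rather than printed, which is what keeps the whole exchange hands-free.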

What's Next

Voice mode is still early. We're working on multi-turn memory within voice sessions, richer tool integrations, and continued improvements to latency and naturalness. If you haven't tried voice mode yet, open alia.onl and tap the microphone — it's available on all plans.

Outreach & Initiatives

Services

Innovation

Sustainability & Impact

Our mission is to fuel innovation with purpose, providing the tools and support to build tools that solve global challenges.

Made with 💚 in the 🌎 by Oxy.

Corporate Information
